Overview

Because of reasons, I felt that I had to materialize my ideas before they drove me crazy. Gleitschirmfliegen The Game is one of my biggest projects of the past few years. In total it took me ~270 hours of editing, plus ~400 hours of rendering (including tests).

The development process was a mixture of learning, having fun and self-torture. Quite a few things are worth writing down.

Intro & Credits

Intro and Credits were the easiest and most fun sections to make. Shotcut is my video editing tool of choice, because it's free and it supports webvfx. Since last year I've been experimenting with webvfx for the telemetry overlay of my paragliding videos. There are issues here and there, but overall it's a great tool.

I tried two new video filters, Sketch and Cartoon. Sketch worked well, and I applied it to the video. Cartoon didn't.

Spiral

The spiral scene is obviously the most important one. At some point I came up with the joke idea of making my paragliding video look like a game. The original idea was just to add some HUD telemetry info (like in racing games) plus some instructions (e.g. "Hold RT for right brake").

This idea was funny, but not exciting enough for me to actually take further actions. But somehow it got developed in my head by itself, and the virtual hoops just "faded in" to the scene.

This hoop thing actually came from my early imagination of the paragliding examination. I had thought that the participant has to fly through some carefully positioned hoops in order to get a pilot license. And of course I had been wondering how on earth the paragliding association could set them up. Yeah I know, I played too many games.

To turn this idea into a video, I needed to:

- Determine the telemetry data (e.g. speed, altitude)

- Determine the position and attitude of the camera

- Design the visual effects

- Design the sound effects

1. Telemetry

This includes the map and the gauges (speed, altitude etc.) in the video. Since last year I've been developing the telemetry overlay for my paragliding videos. It took me one or two months to finish, and I'm happy with the result.

I modified the overlay for this video, which works well.

In general the whole telemetry pipeline includes:

- Modified gpmf-parser for extracting telemetry from GoPro videos.

- Modified canvas-gauges for the gauges

- Shotcut/melt for combining the video and the telemetry overlay

- Scripts to automate everything

GoPro Data

The GoPro Hero 5 records GPS data, as well as accelerometer and gyroscope readings. Only the GPS data are used in my overlay, including 3d position, 3d speed and 2d speed. I managed to compute acceleration from these data, and it turned out to match the accelerometer readings quite well.
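A minimal sketch of one way to get acceleration out of the speed samples, using central differences over time. The function name and the sample values are made up for illustration, and this is not necessarily the exact computation used in the overlay.

```python
def central_difference(times, values):
    """Estimate d(values)/dt at each sample with central differences.

    times  -- sample timestamps in seconds, strictly increasing
    values -- one value per timestamp (e.g. 3d speed in m/s)
    """
    if len(times) != len(values) or len(times) < 2:
        raise ValueError("need at least two matching samples")
    n = len(times)
    rates = []
    for i in range(n):
        lo = max(i - 1, 0)        # falls back to a one-sided difference at the ends
        hi = min(i + 1, n - 1)
        rates.append((values[hi] - values[lo]) / (times[hi] - times[lo]))
    return rates


# Hypothetical 18 Hz samples: speed rising by 0.5 m/s per sample.
dt = 1.0 / 18.0
times = [i * dt for i in range(5)]
speed = [10.0 + 0.5 * i for i in range(5)]
accel = central_difference(times, speed)
```

Differentiating the scalar speed only gives the along-track acceleration. Differentiating the positions twice would give a full 3d vector, at the cost of amplifying GPS noise even more.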

Cesium and swisstopo data

In my original overlay, there are both a 3d map and a 2d map. This requires terrain data (altitude of each point), imagery data (for 3d map) and terrain map (for 2d map).

I was quite impressed when I found all of them at swisstopo. The data is of very high quality and free for personal use. There is even a GeoAdmin API which supports Cesium. The Cesium framework provides a comprehensive set of APIs for map interaction, map rendering and mathematics. And it's open source!

2. Camera Position and Attitude

tldr: I annotated and solved the camera attitude manually.

For VFX I need to know both the position and the attitude of the camera. The camera position is available from the GPS data, but the data is only 18 Hz, and the accuracy is usually 5-10 meters.

The good news is that since I'm in the sky and everything else is far away, these errors are quite small. Some simple denoising and interpolation makes the data usable. This is actually my final solution.
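As a rough illustration of what "simple denoising" can mean here, the sketch below smooths a track with a centered moving average. The window size and the sample track are assumptions, and the filter actually used for the video may have been different.

```python
def moving_average(points, window=5):
    """Smooth a list of (x, y, z) positions with a centered moving average.

    Near the ends the window shrinks symmetrically, so the first and last
    samples are returned unchanged and the output keeps the input length.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    smoothed = []
    for i in range(len(points)):
        h = min(half, i, len(points) - 1 - i)   # symmetric half-width at this index
        chunk = points[i - h:i + h + 1]
        smoothed.append(tuple(sum(p[k] for p in chunk) / len(chunk) for k in range(3)))
    return smoothed


# Hypothetical track: a straight line with one 10 m altitude outlier.
track = [(float(i), 0.0, 100.0) for i in range(7)]
track[3] = (3.0, 0.0, 110.0)
smooth = moving_average(track, window=5)
```

With a window of 5 the outlier is spread over its neighbours, so the 10 m spike drops to 2 m.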

The bad news is that the camera attitude is unavailable. I had thought about recovering the attitude with the accelerometer and gyroscopes, but based on some articles I read before, I don't really believe that would work.

Blender Motion Tracking & Camera Solving

I don't remember how I came across those Blender tutorial videos about motion tracking and camera solving. I was amazed by such features of Blender. From the videos it seems that motion tracking should work quite reliably. This was actually one of the most important reasons that I even started making this video.

I attempted to apply this feature to my videos. Motion tracking worked well, as expected, but camera solving almost always produced a large error. Occasionally I got a good solution for a few consecutive frames, but the error increased dramatically as soon as I tried to extend the frame range.

Camera Calibration

Then I learned about camera distortion and calibration. After reading a few articles about camera calibration with OpenCV, I reckoned "maybe the Blender camera calibration is not good enough, probably OpenCV will do a better job".

OpenCV has a fairly easy-to-use API for camera calibration, although I do have complaints about the Python API: those "vector of vector of vector" data types are so cryptic.

I tried both the standard camera calibration and the fisheye calibration with checkerboard images. The solve error was sometimes large and sometimes small, but the calibrated matrix never helped in Blender.

GoPro SuperView

I also searched for calibration data for the GoPro Hero 5. There are some databases covering the Hero 3, 4 and 5 with different camera settings, especially with the Normal or Wide view angles, but never with SuperView. Only after some reading did I realize that SuperView is not an optical distortion at all, but rather entirely artificial post-processing.

I didn't find the official SuperView algorithm, but I did find some relevant articles:

- https://emirchouchane.com/remap2-gopro-360/

- https://intofpv.com/t-using-free-command-line-sorcery-to-fake-superview

I tried both, and both actually worked quite well: the converted video didn't look distorted at all. Unfortunately, neither helped with camera solving.

Solving Camera with Perspective-n-Point (PnP)

In the usual motion tracking tasks (the ones I saw in those tutorial videos), the objects in the video are buildings or rooms, and it is unlikely that the actual 3d coordinates of the keypoints are available. Otherwise the designer could reconstruct the scene directly, which would make motion tracking useless (or at least not as useful).

On the other hand, in the spiral part of the video, you see nothing but rotating landscape. It's easy to obtain the longitude, latitude and altitude of the features, e.g. corners of a building. Meanwhile since they are far away, the usual data error should be acceptable.
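To use such features together with the camera position, longitude, latitude and altitude have to be brought into one metric coordinate system. A minimal sketch, assuming a flat-earth approximation around a reference point (reasonable only within a few kilometers). The coordinates below are hypothetical, and Cesium offers exact conversions that would normally be used instead.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius; a spherical approximation


def to_local_enu(lon_deg, lat_deg, alt_m, origin):
    """Convert longitude/latitude/altitude to east/north/up meters.

    origin -- (lon_deg, lat_deg, alt_m) of the reference point
    """
    lon0, lat0, alt0 = origin
    east = math.radians(lon_deg - lon0) * EARTH_RADIUS_M * math.cos(math.radians(lat0))
    north = math.radians(lat_deg - lat0) * EARTH_RADIUS_M
    up = alt_m - alt0
    return (east, north, up)


# Hypothetical values, chosen only for illustration.
origin = (8.0, 46.5, 1500.0)                      # e.g. a camera position
corner = to_local_enu(8.0, 46.51, 600.0, origin)  # e.g. a building corner
```

Here the corner ends up about 1.1 km north of and 900 m below the reference point.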

OpenCV provides some functions to solve the PnP problem, but unfortunately none of them worked. At that point I ran out of ideas, gave up, and decided to just track the camera attitude manually. But before that, I think I found the reason for all these failures:

One day I realized that video stabilization was turned on for my videos. This means that the center of the lens keeps moving across video frames. No wonder the camera solving might work for a few consecutive frames but not for more!

Maybe I can recover the stabilization data from accelerometer and gyroscope readings, but I don't think it would work well.

Manual Tracking

Inspired by the perspective-n-point problem, I realized that the camera attitude can be determined if I know both the 2d (viewport) and 3d (real world) coordinates of at least two points, since I already knew the camera position from GPS.

Because of the PnP attempt, I had already tracked several points in the video and found their 3d coordinates. However, I couldn't derive a good formula for solving the camera attitude from them.

My final solution was to track the 3d positions of the "center" (0.5, 0.5) and "center-up" (0.5, 0.75) points of the viewport. With this info, I can compute the camera direction from the camera position and the viewport center, and "center-up" can then be used to determine the up direction.
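The construction can be sketched as a look-at style basis. This is a minimal illustration, assuming a right-handed east/north/up coordinate system in meters and that the "center-up" point appears above the center in the image. The helper names and the sample coordinates are made up.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def camera_basis(position, center_point, center_up_point):
    """Return unit (forward, right, up) vectors of the camera.

    forward points from the camera to the 3d point seen at the viewport center.
    The 3d point seen at "center-up" only fixes the roll around that axis.
    """
    forward = normalize(sub(center_point, position))
    toward_up = sub(center_up_point, position)
    right = normalize(cross(forward, toward_up))
    up = cross(right, forward)  # already unit length, perpendicular to both
    return forward, right, up


# Hypothetical frame: camera 1000 m up, looking north and 45 degrees down.
forward, right, up = camera_basis((0.0, 0.0, 1000.0),
                                  (0.0, 1000.0, 0.0),
                                  (0.0, 2000.0, 0.0))
```

The three vectors form the columns of a rotation matrix, which can then be converted to whatever attitude representation the 3d tool expects.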

Since both points are in the center, I assume that they are not affected by lens distortion or GoPro SuperView too much.

I made a manual tracking tool with Cesium, which was a surprisingly easy task. Since the camera should be moving along a continuous track, I decided to track only every 10th frame or so. In the end I manually tracked ~440 points in total, which is not that many.

| Manual Tracking Tool |

| Interpolated Data (red: center, yellow: center-up) |

One last thing was to interpolate the camera positions. The source data is 18 Hz, but I need 60 Hz. I only noticed this after importing the data into Blender.
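A minimal sketch of such a resampling step, using plain linear interpolation between neighbouring samples. The function name and test data are assumptions, and a smoother spline may well be a better choice in practice.

```python
def resample_linear(times, points, out_rate_hz):
    """Linearly interpolate (x, y, z) samples onto a uniform output rate.

    times  -- input timestamps in seconds, strictly increasing
    points -- one (x, y, z) tuple per timestamp
    """
    if len(times) != len(points) or len(times) < 2:
        raise ValueError("need at least two matching samples")
    frame_count = int((times[-1] - times[0]) * out_rate_hz) + 1
    frames = []
    j = 0  # index of the input segment [times[j], times[j + 1]] in use
    for f in range(frame_count):
        t = times[0] + f / out_rate_hz
        while j < len(times) - 2 and times[j + 1] < t:
            j += 1
        w = (t - times[j]) / (times[j + 1] - times[j])
        a, b = points[j], points[j + 1]
        frames.append(tuple(a[k] + w * (b[k] - a[k]) for k in range(3)))
    return frames


# Hypothetical input: one second of 18 Hz data moving 1 m per sample along x.
times = [i / 18.0 for i in range(19)]
points = [(float(i), 0.0, 1000.0) for i in range(19)]
frames = resample_linear(times, points, 60.0)
```

The same function also works for filling in the manually tracked points between keyframes, since those are just another sparse time series.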

3. VFX

Blender

This is almost my first time using Blender. Well the real first time was when I downloaded Blender years ago when I heard that it got open sourced. The second time was a few months ago when I needed to paint a UV texture and convert the model format.

This was the first time that I used Blender extensively, although I mostly used it programmatically and have not touched the modeling part.

The Blender UI (I'm using 2.79) is honestly quite anti-human, but still fun to explore. Somehow it reminds me of the first time I saw MS Paint and played with all the tools.

The Python extension is another important feature for me. Since the Blender file format is not trivial, Python scripting is the only way for me to import and export data from and for external tools.
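As an illustration (not the actual script used for the video), importing a camera track could look roughly like this. `keyframe_insert` with `data_path` and `frame` is part of Blender's Python API, while the sample format, the function name and the `FakeCamera` stand-in are made up so that the sketch also runs outside Blender.

```python
def apply_camera_track(camera, samples, first_frame=1):
    """Keyframe a camera-like object from per-frame samples.

    camera  -- inside Blender, e.g. bpy.data.objects["Camera"]; any object
               with location, rotation_euler and keyframe_insert works
    samples -- list of (location_xyz, rotation_euler_xyz) tuples, one per frame
    Returns the number of the last keyframed frame.
    """
    for offset, (location, rotation) in enumerate(samples):
        frame = first_frame + offset
        camera.location = location
        camera.rotation_euler = rotation
        camera.keyframe_insert(data_path="location", frame=frame)
        camera.keyframe_insert(data_path="rotation_euler", frame=frame)
    return first_frame + len(samples) - 1


class FakeCamera:
    """Hypothetical stand-in that records keyframes instead of animating."""

    def __init__(self):
        self.location = (0.0, 0.0, 0.0)
        self.rotation_euler = (0.0, 0.0, 0.0)
        self.keys = []

    def keyframe_insert(self, data_path, frame):
        self.keys.append((frame, data_path, getattr(self, data_path)))


# Hypothetical two-frame track.
samples = [((0.0, 0.0, 1000.0), (1.2, 0.0, 0.0)),
           ((5.0, 0.0, 998.0), (1.2, 0.0, 0.1))]
cam = FakeCamera()
last_frame = apply_camera_track(cam, samples)
```

Inside Blender the same function would be called with the real camera object, with the samples loaded from a file written by the external tools.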

With the camera position data, it was not difficult to put all the hoops in position, although sometimes I had to tweak them a little because some data points were not smooth.
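One possible way to derive hoop placements from the track is sketched below: walk along the camera positions, drop a hoop roughly every fixed distance, and orient it along the local flight direction. The spacing, the function name and the sample track are assumptions for illustration only.

```python
import math


def place_hoops(track, spacing_m):
    """Return (center, direction) pairs at least spacing_m apart along the track.

    track     -- list of (x, y, z) camera positions in meters
    direction -- unit vector of the segment leading into the hoop
    """
    hoops = []
    travelled = 0.0
    next_at = spacing_m
    for a, b in zip(track, track[1:]):
        seg = tuple(q - p for p, q in zip(a, b))
        length = math.sqrt(sum(c * c for c in seg))
        if length == 0.0:
            continue  # duplicate sample, no direction to take
        travelled += length
        if travelled >= next_at:
            hoops.append((b, tuple(c / length for c in seg)))
            next_at = travelled + spacing_m
    return hoops


# Hypothetical track: 10 m steps along x, sinking 1 m per step.
track = [(i * 10.0, 0.0, 1000.0 - i) for i in range(11)]
hoops = place_hoops(track, 30.0)
```

Because the direction comes from a single segment, noisy samples show up directly as tilted hoops, which is one reason a manual tweak can still be needed.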

There are plenty of tutorial videos out there, and I learned quite a few things:

- Particle

- Fire Effect

- Baking

- Compositing

- Rendering Optimization

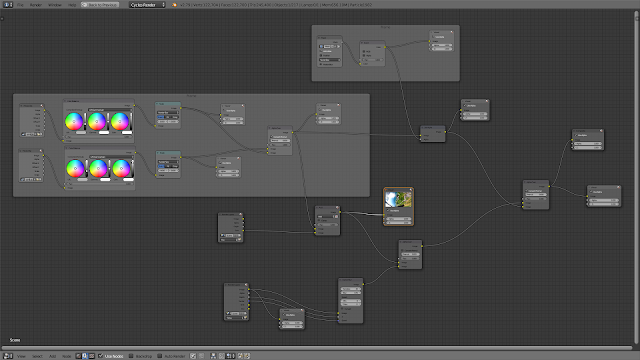

I found the compositing nodes especially fun. Here are the compositing nodes for my video:

Foreground Background Separation (Masking)

The hoops should be rendered behind the glider, but in front of the terrain. This means that I need to separate the foreground and the background. Like this:

In the beginning I tried to find some algorithms or tools for this task. There are a few functions in OpenCV that look similar but don't fit my situation, for example (stable) background subtraction and object identification.

The main obstacles are the rotating background and the unusual distortion (SuperView + stabilization).

In the end I decided to do this manually, which turned out to be super long and tedious.

| Masking |

Basically, for each frame I needed to mark the shape of the glider. There are 2200 frames, and in total I needed to add ~100k control points. I could do ~100 frames per workday, or 200~300 frames per weekend day, although this meant that I couldn't do anything else.

I decided to skip all the lines except for the brake line. I feel that the final result is acceptable. This actually saved lots of work.

It'd be more complicated if some objects should be rendered in front of the glider (e.g. particles). But I made the hoops large enough such that this situation should never happen.
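The layering described in this section can be written down per pixel: the hoop is only visible where it has coverage and where the glider mask is empty. This is a plain-Python illustration of that rule, not the Blender node setup itself, and the function name and pixel values are made up.

```python
def composite_pixel(video, hoop, hoop_alpha, glider_mask):
    """Blend one RGB pixel so the hoop sits behind the glider.

    video       -- (r, g, b) of the original footage, values in 0..1
    hoop        -- (r, g, b) of the rendered hoop layer
    hoop_alpha  -- hoop coverage at this pixel, 0..1
    glider_mask -- 1.0 where the hand-drawn mask marks the glider, else 0.0
    """
    visible = hoop_alpha * (1.0 - glider_mask)  # the glider hides the hoop
    return tuple(visible * h + (1.0 - visible) * v for v, h in zip(video, hoop))


# Hypothetical pixel values.
video = (0.2, 0.4, 0.6)
hoop = (1.0, 0.5, 0.0)
on_glider = composite_pixel(video, hoop, 1.0, 1.0)   # footage wins
on_terrain = composite_pixel(video, hoop, 1.0, 0.0)  # hoop wins
soft_edge = composite_pixel(video, hoop, 0.5, 0.0)   # half-transparent hoop
```

Since the terrain is part of the footage itself, only the glider needs a mask. Anything that should appear in front of the glider would need a second layer composited after this step.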

4. Sound Effects

My friend Andrea kindly helped me with the voice acting. I chose the German voice because that's extra information along with the English subtitle, which should make the video less boring.

Thanks to the YouTube Audio Library, freesound.org and other websites, I was able to find a lot of good BGM and sound effects, except for the sounds of the hoops appearing and being smashed.

For those I learned about a tool called "LabChirp". It's a rather small piece of software and I didn't expect too much from it. Surprisingly, it strikes a really good balance between ease of use and comprehensive features. With some luck, I was able to find the parameters for the sounds I had in my head.

Summary

I'd give this video a rank of B+, which is the same rank I gave to my spiral manoeuvre shown in the video. It's probably OK to show this to my friends or to the public, but it is not the best I can do, and the long, tedious manual labor was not good.

Throughout the video, I paid attention to the information density of each part: it should not be too high or too low, and it should not be constant.

Besides all the technical knowledge that I gained while making this video, I probably also improved my time and project management skills.

Removed Features

1. Before deciding to add the hoops, I spent some time marking the landing point and making an overlay showing its position and distance. This is similar to the "Task icons" in many games. However, it does not fit with the hoops.

2. There is a 3d map in my original telemetry overlay, which shows the estimated attitude of the glider as well as the 3d track. It actually looks very good with normal videos, but here it becomes a little distracting and repetitive alongside the hoops.

3. In early versions of the loading screen (before the spiral scene) I included the Swiss Segelflugkarte and a 3d map of the flying area. But in the end I think the loading screen looks better without them.

What can be improved

1. The editing process took me far too long. There must be a way of shortening it.

2. If I had more time, I'd implement shadow casting between the glider and the hoops.

3. I made the video roughly in chronological order, but in the end I felt tired and unmotivated due to the long, tedious masking process. One direct consequence is that I lost all the excitement of watching the spiral scene, because I had watched this part again and again, back and forth, hundreds of times. This is really bad for creativity. It would probably be better to roughly edit the whole video at the beginning, and then improve each section individually.
